Xuanchen Lu

Hi! I am a Ph.D. student at Cornell Tech, advised by Prof. Andrew Owens. My research focuses on the intersection of computer vision and human perception. Before starting my Ph.D., I was fortunate to be advised by Prof. Xiaolong Wang at UC San Diego and Prof. Judith Fan at Stanford University. I received my M.S. in Computer Science from UC San Diego, where I also earned a B.S. in Computer Science, Mathematics, and Cognitive Science (minor).

Email / Google Scholar / GitHub / Twitter

Research

In early infancy, babies learn to track moving objects, attend to improbable events, and form core concepts such as object permanence and solidity. Through this process, they acquire knowledge about the categories, functions, motion, and intuitive physics of elements in their environment. Can artificial vision systems achieve a comparable level of visual understanding by observing the world through videos? This question naturally involves learning world models from continuous visual observations with minimal supervision. My research interests lie at the intersection of self-supervised learning from videos, understanding the structure and dynamics of the physical world, and human visual perception (e.g., visual abstraction).

(* indicates equal contribution)

SEVA: Leveraging sketches to evaluate alignment between human and machine visual abstraction
Kushin Mukherjee*, Holly Huey*, Xuanchen Lu*, Yael Vinker, Rio Aguina-Kang, Ariel Shamir, Judith E Fan
Advances in Neural Information Processing Systems (NeurIPS), Datasets and Benchmarks Track, 2023
arXiv / website / video / code / dataset

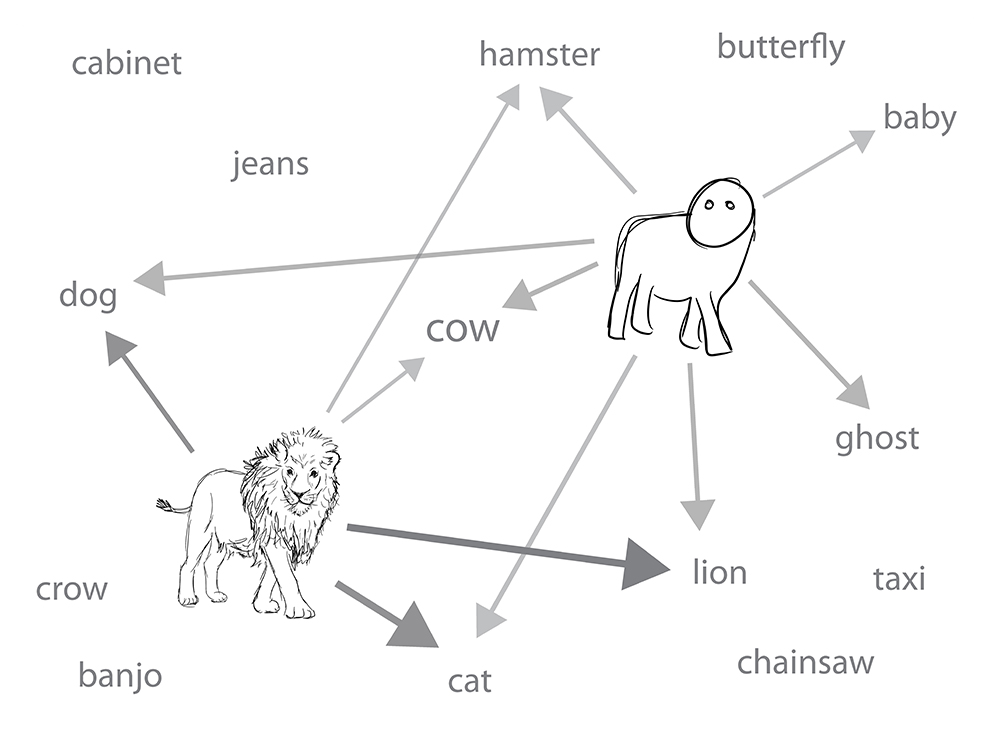

Learning Dense Correspondences between Photos and Sketches
Xuanchen Lu, Xiaolong Wang, Judith E Fan
International Conference on Machine Learning (ICML), 2023
arXiv / website / code / dataset

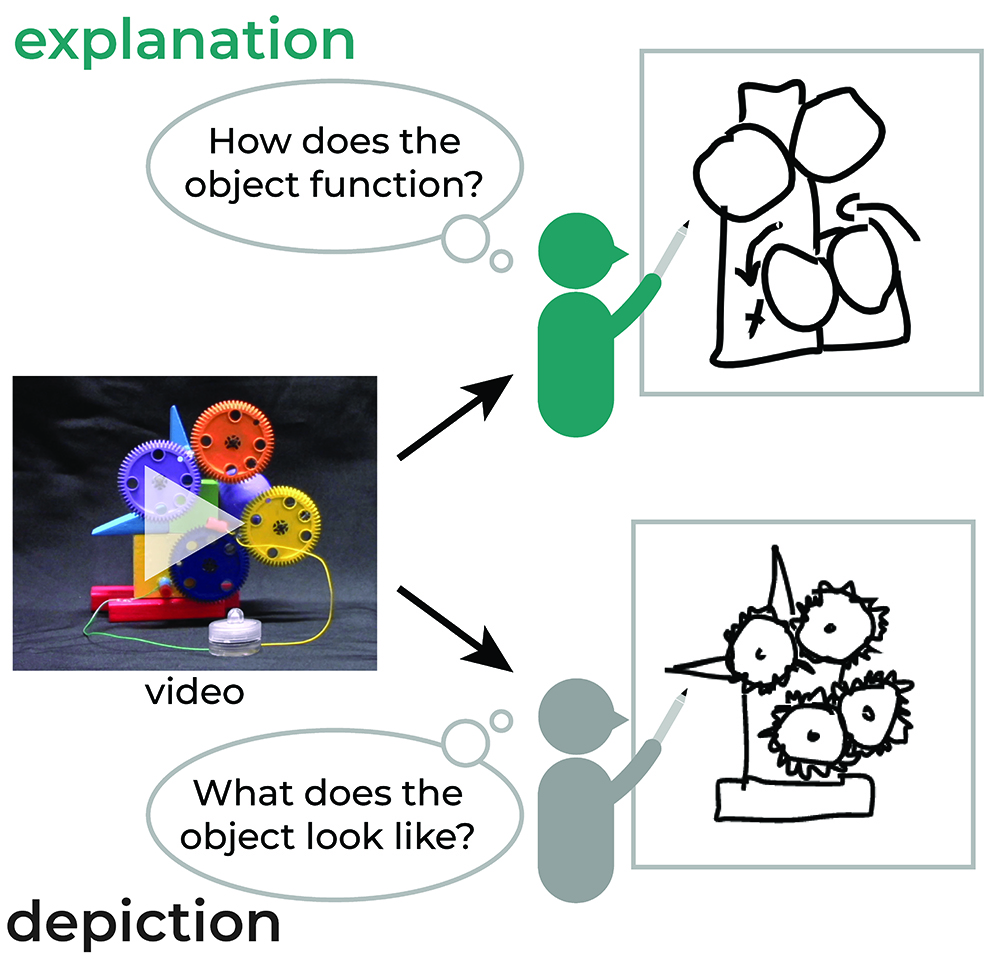

Visual explanations prioritize functional properties at the expense of visual fidelity
Holly Huey, Xuanchen Lu, Caren M Walker, Judith E Fan
Cognition, 2023
paper / journal / dataset

Evaluating machine comprehension of sketch meaning at different levels of abstraction
Kushin Mukherjee, Xuanchen Lu, Holly Huey, Yael Vinker, Rio Aguina-Kang, Ariel Shamir, Judith E Fan
Proceedings of the 45th Annual Conference of the Cognitive Science Society (CogSci), 2023
paper / dataset

Evaluating machine comprehension of sketch meaning at different levels of abstraction
Xuanchen Lu, Kushin Mukherjee, Rio Aguina-Kang, Holly Huey, Judith E Fan
Journal of Vision, 2023
abstract

Design and source code from Jon Barron's website